MIT Disavows Doctoral Student Paper on AI’s Productivity Benefits

MIT has distanced itself from a research paper by one of its doctoral students, citing concerns over the “integrity” of the findings. The paper, which examined how artificial intelligence affects research and innovation, was posted as a preprint, and the university is now seeking its withdrawal.

The Contentious Research Paper

The paper, titled “Artificial Intelligence, Scientific Discovery, and Product Innovation,” was written by Aidan Toner-Rodgers, a doctoral student in MIT’s economics program. It reported that introducing an AI tool in a materials science lab led to more materials discoveries and patent filings, but also to a decline in researchers’ job satisfaction.

Initial Praise and Subsequent Concerns

Initially, the paper drew praise from prominent MIT economists, including Nobel laureate Daron Acemoglu and David Autor. Autor said he was “floored” by the findings, and both economists highlighted the paper’s relevance to ongoing discussions about AI’s impact on science.

Their stance has since reversed. In a recent statement, Acemoglu and Autor said they had no confidence in the “provenance, reliability, or validity of the data and in the veracity of the research.” This reversal prompted MIT’s decision to disavow the paper.

The Investigation and Its Aftermath

Concerns about the paper’s integrity were first raised in January by a computer scientist with expertise in materials science, who approached Acemoglu and Autor. This led to an internal review by MIT.

Citing student privacy laws, MIT has not disclosed the results of its review, though it has confirmed that Toner-Rodgers is “no longer at MIT.”

Efforts to Withdraw the Paper

MIT has formally asked The Quarterly Journal of Economics, where the paper was under consideration for publication, to withdraw it. The university has also sought the paper’s removal from the preprint server arXiv. However, arXiv typically accepts withdrawal requests only from a paper’s authors, and MIT said that “to date, the author has not done so.”

Key Takeaways and Implications

This incident raises several critical questions about the use of AI in research and the importance of data integrity. Here are some key points to consider:

- Data Integrity: The core issue is the reliability and validity of the data behind the research, which underscores the need for rigorous data verification in AI-related studies.

- Peer Review: The paper drew praise while it was still a preprint under consideration at a journal. The concerns that later emerged highlight the importance of thorough peer review in catching flaws or inaccuracies before findings gain wide acceptance.

- Ethical Considerations: The incident touches on the ethical responsibilities of researchers when using AI tools, including transparency and accountability in data collection and analysis.

- Impact on AI Research: This event may lead to increased scrutiny of AI's role in scientific research, potentially influencing future studies and methodologies.

Broader Context: AI in Scientific Research

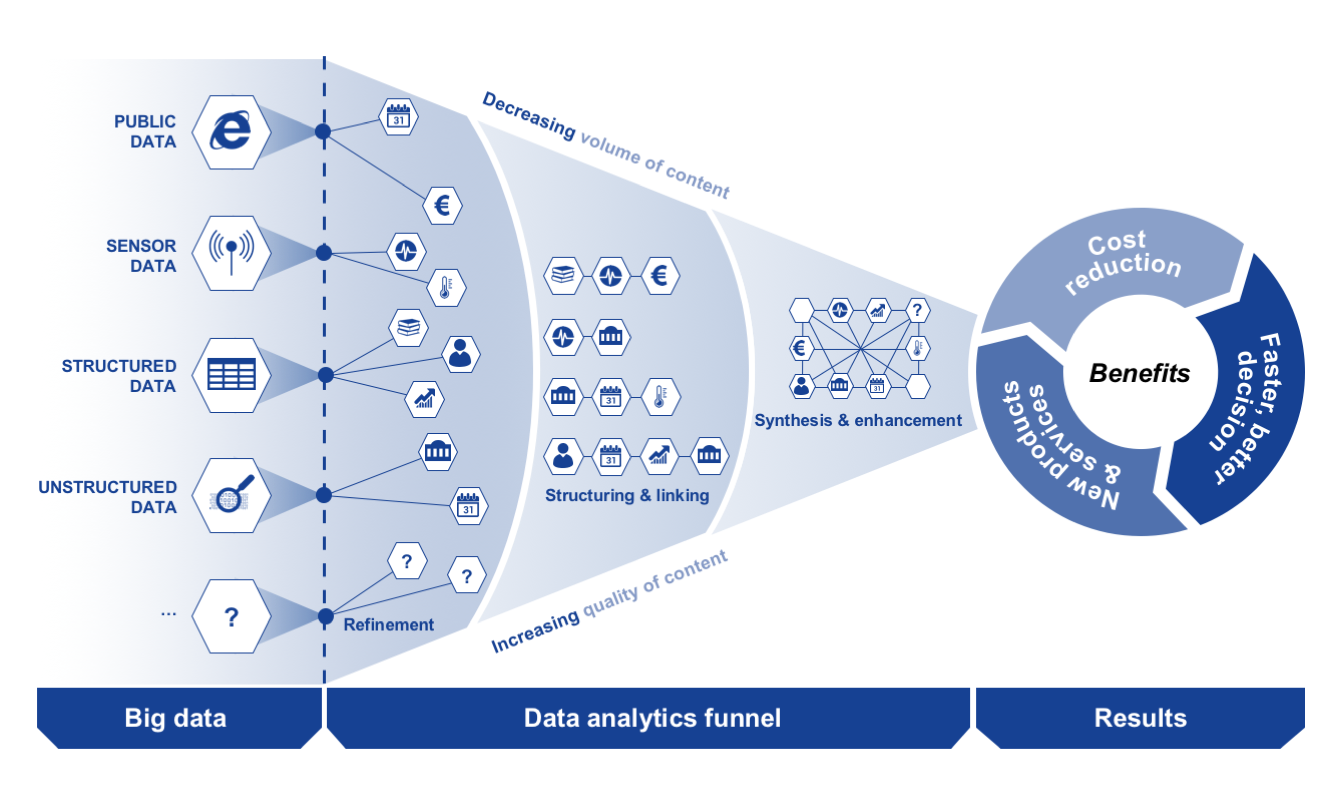

The use of AI in scientific research is rapidly expanding, offering new avenues for discovery and innovation. However, this incident serves as a reminder of the potential pitfalls and the need for careful oversight.

The Promise of AI in Science

AI tools can analyze vast datasets, identify patterns, and accelerate the pace of research in various fields. For example:

- Drug Discovery: AI algorithms can predict the efficacy of drug candidates, reducing the time and cost associated with traditional drug development.

- Materials Science: AI can assist in designing new materials with specific properties, leading to breakthroughs in engineering and technology.

- Climate Modeling: AI can improve the accuracy of climate models, helping scientists better understand and predict the effects of climate change.

The Challenges and Risks

Despite the potential benefits, the integration of AI in scientific research also presents several challenges:

- Data Bias: AI algorithms are only as good as the data they are trained on. Biased data can lead to skewed results and inaccurate conclusions.

- Lack of Transparency: The “black box” nature of some AI models can make it difficult to understand how they arrive at their conclusions, raising concerns about accountability.

- Over-Reliance on AI: Researchers may become overly dependent on AI tools, potentially overlooking important nuances or alternative explanations.

- Security Risks: AI systems can be vulnerable to cyberattacks, which could compromise the integrity of research data.

Expert Opinions and Industry Reactions

The disavowal of the paper has sparked considerable discussion within the academic and tech communities. Experts emphasize the need for a balanced approach to AI in research, combining its capabilities with human oversight and critical thinking.

Daron Acemoglu’s Perspective

As a leading economist and Nobel laureate, Daron Acemoglu lent significant credibility to the paper’s findings with his initial support. His subsequent withdrawal of that support underscores the seriousness of the data integrity concerns. Acemoglu has been a vocal advocate for responsible AI development, emphasizing the importance of ethical considerations and societal impact.

David Autor’s Insights

David Autor, another respected MIT economist, has also helped shape the discourse around AI and its effects on the labor market. His initial enthusiasm reflected how significant the paper’s reported productivity gains appeared, while his later concerns reflect a commitment to upholding rigorous research standards.

The Future of AI in Academic Research

The incident involving the MIT doctoral student’s paper serves as a valuable lesson for the academic community. It highlights the importance of maintaining high standards of research integrity, even when using advanced AI tools.

Recommendations for Responsible AI Research

To ensure the responsible use of AI in academic research, the following recommendations should be considered:

- Implement Robust Data Validation Processes: Researchers should employ rigorous methods to verify the accuracy and reliability of their data.

- Promote Transparency in AI Methodologies: Researchers should clearly document the AI algorithms and techniques used in their studies, allowing for greater scrutiny and understanding.

- Encourage Interdisciplinary Collaboration: Collaboration between AI experts and domain specialists can help ensure that AI tools are used appropriately and effectively.

- Establish Ethical Guidelines for AI Research: Universities and research institutions should develop clear ethical guidelines for the use of AI in research, addressing issues such as data privacy, bias, and accountability.

- Provide Training and Education: Researchers should receive training on the responsible use of AI tools, including how to identify and mitigate potential risks.

Conclusion

The disavowal of the AI research paper at MIT underscores the critical importance of data integrity and ethical considerations in the age of artificial intelligence. While AI offers tremendous potential for advancing scientific discovery and product innovation, it must be approached with caution and a commitment to rigorous research standards. By implementing robust data validation processes, promoting transparency, and fostering interdisciplinary collaboration, the academic community can harness the power of AI while mitigating its potential risks.