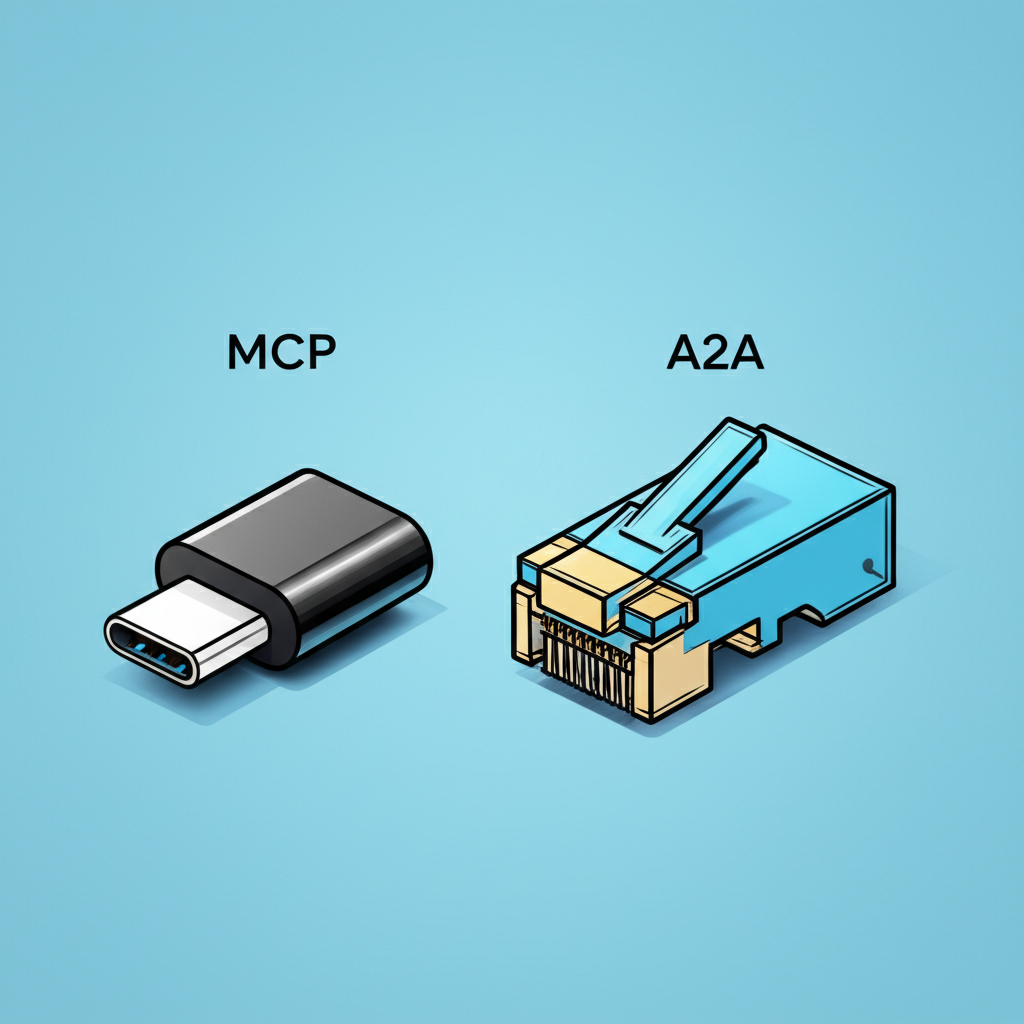

AI Agent Protocols: Why MCP is the USB-C and A2A is the Ethernet

In the rapidly evolving landscape of artificial intelligence, the concept of 'agents' is gaining significant traction. These are not merely passive models responding to prompts, but systems designed to interpret information, make decisions, and execute tasks, often autonomously. As these agents become more sophisticated and numerous, a fundamental challenge emerges: how do they communicate effectively, not only with the external world of data and tools but also with each other? Just as the digital age necessitated standards for device connectivity (like USB) and network communication (like Ethernet), the age of agentic AI demands its own set of protocols.

The need for standardized communication is clear. Without agreed-upon methods for exchanging information, describing capabilities, and managing interactions, the potential of AI agents remains limited, confined to isolated systems or requiring cumbersome, bespoke integrations. The development of common protocols is a crucial step towards building complex, collaborative agentic systems that can tackle more challenging problems and integrate seamlessly into existing technological infrastructures.

The Rise of AI Agent Protocols

It was only a matter of time before the first protocols governing agentic AI began to surface. Among the earliest and most recognizable is Anthropic's Model Context Protocol (MCP). Introduced with the goal of enhancing the capabilities of large language models, MCP provides a structured way for models to interact with external resources. Think of databases, APIs, or specific software functions – MCP aims to be the universal connector, making it easier for an AI model to access and utilize these tools effectively.

MCP's design facilitates the connection of AI systems with data sources and tools, enabling them to perform actions beyond generating text. This capability is fundamental to creating agents that can, for instance, query a database, send an email, or interact with a cloud service. However, this increased connectivity also introduces new complexities and potential vulnerabilities, as highlighted by instances of security threats and data leakage associated with early implementations.

While MCP has garnered attention and industry backing, it is not the sole player in the emerging field of AI protocols. At its Cloud Next conference in April, Google unveiled its own significant contribution: the Agent2Agent (A2A) protocol. On the surface, MCP and A2A might appear quite similar. Both employ client-server architectures and leverage common underlying technologies like JSON-RPC for messaging and HTTP or Server-Sent Events (SSE) for transport. Both have also quickly gained support from major players in the tech industry.

This superficial resemblance might lead some to believe we are witnessing a replay of historical format wars, like VHS versus Betamax, with two tech giants vying for dominance in AI communication standards. However, a closer examination reveals that MCP and A2A are designed to address fundamentally different aspects of the agentic AI ecosystem. Much of the confusion stems from the still-evolving and sometimes vague definition of what constitutes an 'AI agent' itself.

Defining the AI Agent

Before diving deeper into the protocols, let's clarify what we mean by an AI agent. At its core, an AI agent is typically a system that incorporates a decision-making component, often powered by a large language model or other AI model. This model is responsible for interpreting inputs, understanding goals, and deciding on a course of action. Crucially, agents are often equipped with the ability to interact with their environment through a set of tools or functions.

These tools can be anything from simple calculators and web search functions to complex interfaces for interacting with enterprise software, databases, or physical systems. The agent uses its model to determine which tool is needed for a given task and how to use it. For example, a customer service agent might use a tool to look up order history in a database, while a data analysis agent might use a tool to run a statistical calculation or generate a report.

Consider a practical example: a logging agent designed to monitor system logs. This agent might use a tool to periodically poll an API endpoint where logs are collected. Its AI model analyzes these logs. If an anomaly is detected, the agent might then use another tool to automatically generate a support ticket in an issue tracking system for a human operator to review. The potential for such agents to automate repetitive or complex tasks is immense, and some foresee them potentially replacing certain human roles once they achieve sufficient reliability. Early trials, however, indicate that AI agents still have a significant way to go in terms of consistency and accuracy, with studies showing high failure rates on common office tasks. Nevertheless, the potential for efficiency gains is driving major tech companies to explore how agents could reshape workforces.
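The logging agent's loop can be sketched in a few lines of Python. This is a minimal illustration, not a real implementation: the keyword check in `detect_anomalies` merely stands in for the AI model, and `fetch_logs` and `create_ticket` are hypothetical callables representing the two tools (the log API and the issue tracker).

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Ticket:
    title: str
    body: str


def detect_anomalies(lines: Iterable[str]) -> list[str]:
    """Stand-in for the model: flag lines that mention ERROR or CRITICAL."""
    return [ln for ln in lines if "ERROR" in ln or "CRITICAL" in ln]


def run_once(
    fetch_logs: Callable[[], list[str]],     # hypothetical "poll the log API" tool
    create_ticket: Callable[[Ticket], None]  # hypothetical "file a ticket" tool
) -> int:
    """One polling cycle: fetch logs, analyze them, file a ticket per anomaly."""
    anomalies = detect_anomalies(fetch_logs())
    for line in anomalies:
        create_ticket(Ticket(title="Log anomaly detected", body=line))
    return len(anomalies)


# Usage with in-memory stand-ins for both tools.
sample_logs = ["INFO service started", "ERROR disk quota exceeded", "INFO heartbeat"]
filed: list[Ticket] = []
count = run_once(lambda: sample_logs, filed.append)
```

Passing the tools in as plain callables keeps the decision logic separate from the integrations, which is the same separation the protocols discussed below try to standardize.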

MCP: Connecting Models to the World (The USB-C Analogy)

This brings us back to the protocols designed to make these agents more capable. Anthropic's Model Context Protocol (MCP) is primarily focused on the interaction between an AI model and the external tools and data sources it needs to access. As we explored in detail previously, MCP aims to be a universal, open standard for this specific type of connection.

The core idea behind MCP is to provide a standardized interface through which an AI model can discover the capabilities of available tools and data sources, request information from them, and instruct them to perform actions. It abstracts away the specifics of how each tool works, presenting a unified way for the model to interact with a diverse ecosystem of external resources. This is why Anthropic describes MCP as being like USB-C for AI. Just as a single USB-C port can connect a laptop to a display, external hard drive, power source, and various peripherals using a common standard, MCP allows an AI model to connect to and utilize a wide array of external functionalities through a single, standardized protocol.

The protocol follows a standard client-server architecture. The application hosting the AI model acts as the client, initiating requests on the model's behalf, while the external tool or data source is exposed through a server that responds to requests and executes tasks. Communication uses JSON-RPC, a lightweight remote procedure call protocol encoded in JSON. These messages are carried over one of several transports, including stdio, HTTP, or Server-Sent Events (SSE), depending on the specific requirements of the interaction and the tools involved.
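To make the message flow concrete, here is a minimal sketch of the JSON-RPC 2.0 envelopes a client might send. The `tools/list` and `tools/call` method names follow the MCP specification; the tool name `query_orders` and its arguments are hypothetical, and no transport is involved, only the construction of the messages.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched


def jsonrpc_request(method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request envelope."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


def jsonrpc_result(request: dict, result: dict) -> dict:
    """Build the matching success response for a request."""
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# Client side: discover the available tools, then invoke one of them.
list_req = jsonrpc_request("tools/list", {})
call_req = jsonrpc_request(
    "tools/call",
    {"name": "query_orders", "arguments": {"customer_id": "c-42"}},  # hypothetical tool
)

# Server side: reply with the same id; the result payload here is illustrative.
call_resp = jsonrpc_result(
    call_req, {"content": [{"type": "text", "text": "3 open orders"}]}
)

wire = json.dumps(call_req)  # what would travel over stdio or HTTP
```

The request and response share an `id`, which is how a client correlates replies when several calls are in flight over the same connection.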

The power of MCP lies in its ability to standardize the description and invocation of external functions. Tools expose their capabilities (what they do, what inputs they require, what outputs they produce) in a format that the AI model can understand via MCP. This allows developers to build new tools or wrap existing APIs and make them accessible to any MCP-compliant AI model without needing model-specific integrations for each tool. This interoperability is key to scaling agentic capabilities.
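The idea of describing a tool once and invoking it generically can be sketched as follows. This is an assumption-laden toy, not an MCP SDK: the descriptor shape (`name`, `description`, and a JSON Schema under `inputSchema`) mirrors what MCP tool listings contain, but `register_tool`, `list_tools`, and `call_tool` are hypothetical helpers, and the validation only checks for required keys.

```python
from typing import Any, Callable

# Hypothetical in-memory registry mapping tool names to descriptors and handlers.
TOOLS: dict[str, dict[str, Any]] = {}


def register_tool(name: str, description: str,
                  input_schema: dict, handler: Callable[..., Any]) -> None:
    """Wrap an existing function so it can be described and invoked generically."""
    TOOLS[name] = {
        "name": name,
        "description": description,
        "inputSchema": input_schema,
        "handler": handler,
    }


def list_tools() -> list[dict]:
    """Return the public descriptors (everything except the handler)."""
    return [{k: v for k, v in t.items() if k != "handler"} for t in TOOLS.values()]


def call_tool(name: str, arguments: dict) -> Any:
    """Check required arguments against the schema, then invoke the handler."""
    tool = TOOLS[name]
    required = tool["inputSchema"].get("required", [])
    missing = [key for key in required if key not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return tool["handler"](**arguments)


register_tool(
    "add",
    "Add two numbers.",
    {
        "type": "object",
        "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
        "required": ["a", "b"],
    },
    lambda a, b: a + b,
)

total = call_tool("add", {"a": 2, "b": 3})  # 5
```

Because the caller only sees the descriptor, any client that understands the descriptor format can use the tool without knowing how the handler is implemented.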

However, the implementation of such protocols is not without challenges. As mentioned earlier, connecting powerful AI models to external systems, especially those handling sensitive data, introduces security risks. A reported issue with an Asana feature leveraging MCP highlighted how vulnerabilities in the interaction layer could potentially lead to data leakage, underscoring the critical need for robust security measures and careful implementation when connecting AI agents to real-world systems.

Despite these challenges, MCP represents a significant step towards making AI models more functional and integrated into existing digital workflows. It provides a necessary layer of abstraction and standardization for tool use, which is a cornerstone of building capable AI agents. While other frameworks and methods, such as those found in libraries like LangChain, can also achieve similar functionality for tool interaction, MCP aims to establish a widely adopted, open standard for this specific purpose.

Here's a high-level look at MCP's architecture. Credit: modelcontextprotocol.io

A2A: Enabling Agent Collaboration (The Ethernet Analogy)

If MCP is focused on how an AI model talks to tools and data (like a computer talking to peripherals via USB), then Google's Agent2Agent (A2A) protocol is concerned with how AI agents talk to *each other*. This is where the Ethernet analogy comes into play. Ethernet is the foundational technology for local area networks, enabling multiple computers and devices to communicate and share resources. Similarly, A2A is designed to facilitate communication and collaboration among different AI agents within a system or across a network.

The core premise behind A2A is the belief that the most powerful and useful agentic systems will not be monolithic entities but rather compositions of multiple, more specialized agents working together. Imagine a system where one agent specializes in data retrieval, another in natural language understanding, a third in complex calculations, and a fourth in interacting with a specific software application. For such a system to function effectively, these agents need a standardized way to discover each other, understand what tasks they can delegate, pass information back and forth, and track the progress of collaborative efforts.

A2A provides this framework for inter-agent communication. Like MCP, it utilizes JSON-RPC for messaging and supports HTTP and SSE as transport protocols. However, its communication flow is tailored specifically for agent-to-agent interactions.

The A2A Communication Flow

The typical A2A interaction follows a structured process:

- Discovery: Agents within a system first need to become aware of each other's existence and capabilities. This phase involves agents exchanging information, akin to swapping business cards. These 'agent cards' contain essential details such as the agent's name, a description of its functions, network addresses for communication, and authentication methods. They also detail optional features supported, like streaming data via SSE, and the types of data formats (text, images, audio, video, structured data) the agent can process or produce.

- Task Creation: Once agents are aware of one another, one agent (acting as the client) can send a task request to another agent (acting as the server). The task request specifies what the client agent needs the server agent to do, providing necessary inputs or parameters.

- Task Acknowledgment and Tracking: Upon receiving a task, the server agent sends back an acknowledgment message to the client, including a unique task ID. This ID is crucial for tracking the progress of the task, especially for operations that might take a significant amount of time to complete.

- Progress Updates: For long-running tasks, the client agent needs a way to monitor the server agent's progress. A2A supports two primary methods for this: polling, where the client periodically queries the server for updates, and Server-Sent Events (SSE), where the server can proactively push updates to the client as the task progresses, provided both agents support SSE. This allows the server agent to inform the client about milestones, intermediate results, or even flag missing information (e.g., needing a user's email address or a support ticket's severity level) required to continue the task.

- Result Delivery (Artifacts): Once the task is completed, the server agent sends the result back to the client. A2A refers to these results as 'artifacts'. An artifact can be complex, containing various components such as plain text, structured JSON data, images, or other media, depending on the nature of the task and the output produced by the server agent.
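The five steps above can be sketched as a small in-memory simulation. Everything here is illustrative: the `AgentCard`, `Task`, and `ServerAgent` classes and their field names are assumptions loosely modeled on the concepts described, not the A2A wire format, and the server only advances when polled so the example stays deterministic. The polling variant is shown; SSE would push the same state changes instead.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class AgentCard:
    """Discovery: what an agent advertises about itself (illustrative fields)."""
    name: str
    description: str
    url: str
    supports_streaming: bool = False
    input_modes: list[str] = field(default_factory=lambda: ["text"])
    output_modes: list[str] = field(default_factory=lambda: ["text"])


@dataclass
class Task:
    id: str
    request: str
    state: str = "submitted"
    artifacts: list[dict] = field(default_factory=list)


class ServerAgent:
    def __init__(self, card: AgentCard) -> None:
        self.card = card
        self._tasks: dict[str, Task] = {}

    def create_task(self, request: str) -> str:
        """Task creation and acknowledgment: store the task, return its ID."""
        task = Task(id=str(uuid.uuid4()), request=request)
        self._tasks[task.id] = task
        return task.id

    def step(self, task_id: str) -> None:
        """Advance the task by one state; stands in for real work."""
        task = self._tasks[task_id]
        if task.state == "submitted":
            task.state = "working"
        elif task.state == "working":
            task.artifacts.append({"type": "text", "text": f"done: {task.request}"})
            task.state = "completed"

    def get_task(self, task_id: str) -> Task:
        """Progress updates via polling: report the current task state."""
        return self._tasks[task_id]


def poll_until_done(server: ServerAgent, task_id: str, max_polls: int = 10) -> Task:
    """Client-side polling loop; returns the task once it is completed."""
    for _ in range(max_polls):
        task = server.get_task(task_id)
        if task.state == "completed":
            return task
        server.step(task_id)  # a real server would progress on its own
    raise TimeoutError(f"task {task_id} did not complete in {max_polls} polls")


card = AgentCard("diagnostic", "Diagnoses support tickets", "https://agents.example/diag")
server = ServerAgent(card)
task_id = server.create_task("diagnose ticket 123")
finished = poll_until_done(server, task_id)
```

The task ID returned by `create_task` is the only handle the client needs: every later query, and the final artifact, is looked up through it.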

Returning to our logging agent example, after detecting an anomaly and creating a support ticket (perhaps using MCP to interact with the ticketing system API), the logging agent might then use A2A to send this ticket information as a task to a separate diagnostic agent. The diagnostic agent, acting as the server, would receive the ticket artifact, perform its analysis (potentially using its own tools via MCP), and then send back a new artifact via A2A indicating whether the issue can be resolved automatically or requires human intervention.

The flexibility of A2A lies in the fact that the client and server roles are not fixed. An agent can be a client for one interaction (requesting a diagnostic analysis) and a server for another (responding to a request for log data from a different agent). This ability to switch roles from one interaction to the next is essential for building complex, multi-agent workflows.
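A short sketch shows how one agent can play both roles. The `Agent` class, its `handle` and `delegate` methods, and the skill names are hypothetical; direct method calls stand in for what would be network requests between agents.

```python
from typing import Callable


class Agent:
    """An agent that can both serve requests and send them (illustrative)."""

    def __init__(self, name: str, skills: dict[str, Callable[[str], str]]) -> None:
        self.name = name
        self.skills = skills

    def handle(self, skill: str, payload: str) -> str:
        """Server role: perform one of this agent's own skills."""
        return self.skills[skill](payload)

    def delegate(self, other: "Agent", skill: str, payload: str) -> str:
        """Client role: ask another agent to perform one of its skills."""
        return other.handle(skill, payload)


diagnostic = Agent(
    "diagnostic",
    {"diagnose": lambda ticket: "needs human review" if "disk" in ticket
     else "auto-resolvable"},
)
logging_agent = Agent("logging", {"get_logs": lambda query: f"logs matching {query!r}"})

# The logging agent acts as client here...
verdict = logging_agent.delegate(diagnostic, "diagnose", "ERROR disk quota exceeded")
# ...and as server here, answering the diagnostic agent's request for log data.
logs = diagnostic.delegate(logging_agent, "get_logs", "disk")
```

Neither agent is inherently a client or a server; the role is a property of each interaction.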

Here's a breakdown of how Google's A2A Protocol works.

MCP and A2A: Complementary, Not Competing

Understanding the distinct purposes of MCP and A2A reveals that they are not necessarily competing standards like VHS and Betamax, but rather complementary protocols addressing different layers of the agentic AI stack. MCP focuses on the 'vertical' connection between an AI model and the external world of tools and data. A2A focuses on the 'horizontal' connection between different AI agents.

An agent might use MCP to interact with a database or an API to gather information or perform a specific action. That same agent might then use A2A to communicate with another agent that specializes in, say, summarizing information, generating reports, or interacting with a different part of the system. A complex agentic workflow could easily involve multiple agents, each using MCP to interact with their specialized tools and A2A to coordinate their efforts and pass results between them.

The analogy holds: USB-C connects your computer to its peripherals (external drives, monitors, etc.), while Ethernet connects your computer to other computers and resources on a network. You need both for a fully functional computing environment. Similarly, you might need both MCP and A2A (or protocols like them) for a fully functional, collaborative agentic AI system.

Industry Backing and the Path to Standardization

Both MCP and A2A have quickly gained significant support from major players in the tech industry. Anthropic's MCP has seen adoption among companies building tools and services designed to be integrated with AI models. Google's A2A, since its unveiling, has also attracted broad support from a diverse group of companies, including model builders, cloud providers, and software vendors such as Accenture, Arize, Atlassian, Workday, and Intuit, among many others.

A significant development for A2A occurred in June when Google announced its intention to donate the protocol to the Linux Foundation. This move, backed by companies like Amazon, Microsoft, Cisco, Salesforce, SAP, and ServiceNow, signals a strong push towards making A2A an open, community-driven standard. Donating a technology to a neutral body like the Linux Foundation is often seen as a way to encourage broader adoption and collaboration across the industry, mitigating concerns that the protocol might be tied to a single vendor's ecosystem.

The push for standardization in AI agent communication is a positive sign for the future of agentic systems. Standards reduce fragmentation, lower the barrier to entry for developers, and foster interoperability, allowing different agents and tools from various vendors to work together seamlessly. This is essential for building the complex, multi-agent applications that are envisioned for the future.

However, the path to establishing widely adopted standards in a field as dynamic as AI is challenging. The AI ecosystem is still in its infancy, with rapid advancements occurring constantly. New models, capabilities, and agentic architectures are emerging at a pace that can outstrip traditional standards bodies, which are often characterized by slower, more deliberate processes. Disagreements over design choices, feature sets, and governance can also slow down standardization efforts.

It is entirely possible, even likely, that as our understanding of agentic systems deepens and the technology matures, new standards and protocols will emerge. These might refine existing approaches, address new challenges, or cater to specific types of agents or applications. The landscape of AI communication protocols is far from settled, and the evolution will be driven by real-world usage, developer feedback, and the ongoing innovation in AI research.

The Future of Agentic Communication

The development and adoption of protocols like MCP and A2A are critical steps towards realizing the full potential of AI agents. By providing standardized ways for agents to interact with tools, data, and each other, these protocols enable the creation of more capable, reliable, and complex agentic systems. Such systems hold the promise of automating tedious tasks, assisting humans with complex problem-solving, and unlocking new levels of efficiency in various industries.

The distinction between MCP's focus on model-to-tool interaction and A2A's focus on agent-to-agent collaboration highlights the different layers of communication required in sophisticated agentic architectures. While an agent needs to effectively use external resources (MCP's domain), it also needs to be able to delegate tasks, share information, and coordinate with other agents (A2A's domain) to achieve larger goals.

The industry's rapid embrace of these protocols, culminating in the donation of A2A to the Linux Foundation, suggests a strong desire for open standards that can accelerate development and deployment of agentic AI. However, the journey is just beginning. The robustness, security, and scalability of these protocols will be tested as agentic systems move from research labs into production environments. The ongoing dialogue and collaboration within the AI community and standards bodies will be essential to navigate the challenges and build a truly interoperable and powerful ecosystem of AI agents.

Ultimately, the success of AI agents hinges not just on the intelligence of individual models, but on their ability to communicate and collaborate effectively within a larger system. Protocols like MCP and A2A are laying the groundwork for this interconnected future, defining the 'languages' that will allow AI agents to speak, listen, and work together to accomplish tasks that are currently beyond the reach of isolated AI systems. The question is no longer *if* agents will need to communicate, but *how*, and these emerging protocols offer compelling answers to that fundamental challenge.