The Growing Tide: Why Public Backlash Against Generative AI Is Strengthening

Before Duolingo, the popular language-learning app, temporarily wiped its videos from TikTok and Instagram in mid-May 2025, its social media presence was a masterclass in brand engagement. The quirky green owl mascot had achieved viral fame multiple times, becoming instantly recognizable, especially among younger demographics. It was a marketing success story that many other companies envied, demonstrating how a brand could connect authentically with its audience online.

However, this carefully cultivated public image took a significant hit when news emerged that Duolingo was becoming an “AI-first” company. The strategic shift included plans to reduce the company's reliance on contractors by automating tasks that generative AI could handle. The reaction was swift and overwhelmingly negative.

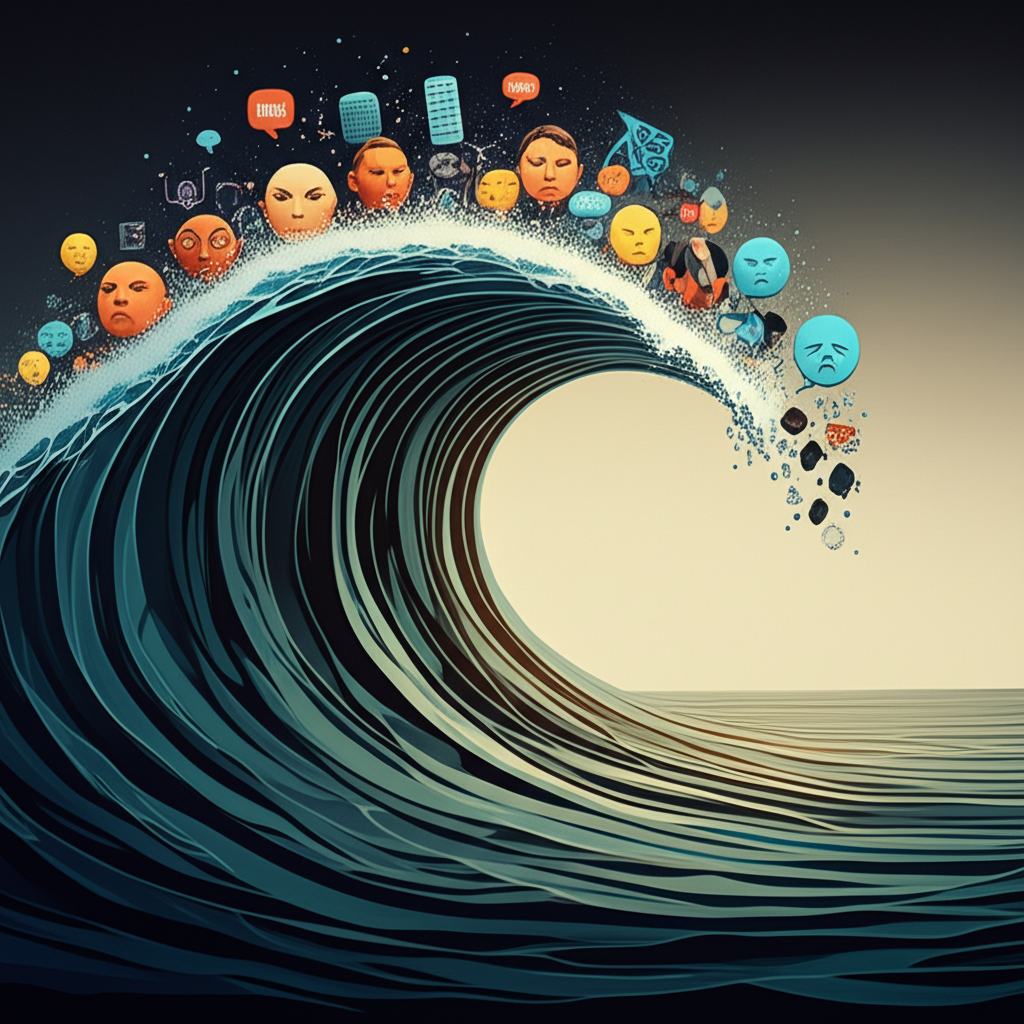

Young users, who had previously celebrated the brand, began posting performative videos of themselves deleting the app, often lamenting the loss of their hard-earned daily streaks. The comments sections on Duolingo’s social media posts filled with outrage, most of it aimed at the perceived replacement of human workers with automation. Though specific to one company, the incident points to a much larger trend: widespread public weariness with, and growing resistance to, the spread of generative AI into daily life and work.

From Awe to Animosity: The Shifting Public Mood

When generative AI tools like ChatGPT first burst onto the scene in late 2022, the initial reaction from many was one of wonder and excitement. The ability to generate text, images, and other media with simple prompts felt like a glimpse into a futuristic world. You could, for instance, easily create a cartoon image of a duck riding a motorcycle, a seemingly harmless and entertaining application of the technology.

However, the novelty quickly wore off for many as the technology's implications became clearer. Artists were among the first to object, pointing out that their visual and written works were being scraped from the internet, without permission or compensation, to train these AI systems. The pushback from the creative community intensified during the 2023 Hollywood writers' strike, in which AI's potential impact on writing jobs was a key point of contention. A wave of copyright lawsuits brought against AI companies by publishers, creative professionals, and even major Hollywood studios further cemented the perception that generative AI threatens creators' livelihoods and intellectual property.

Brian Merchant, author of Blood in the Machine, a book about the Luddites' historical resistance to worker-replacing technology, has noticed this shift in public sentiment. He observes a “new sort of ambient animosity towards the AI systems,” suggesting that AI companies have quickly followed the familiar Silicon Valley pattern in which rapid disruption gives way to public discontent. That pattern, which tends to put growth and technological advancement ahead of social and ethical considerations, has brought AI into conflict with broader societal values and concerns.

Data supports this observation. According to the Pew Research Center, the percentage of US adults more concerned than excited about increased AI usage in daily life jumped from 38 percent before ChatGPT's release to 52 percent by late 2023. This level of concern has remained relatively stable since, indicating a sustained public apprehension rather than a fleeting reaction.

The Multifaceted Critiques Fueling the Backlash

The backlash against generative AI is not monolithic; it stems from a confluence of compounding issues, many of which were highlighted by ethical AI researchers long before the current boom. These concerns, once confined largely to academic discussions and activist circles, have now entered mainstream public consciousness as AI tools become more ubiquitous.

Job Displacement and Worker Anxiety

Perhaps the most visceral fear driving public opposition is the potential for AI to replace human workers. High-profile statements from leaders at companies like Klarna and Salesforce about AI reducing the need for new hires in customer service and engineering roles have amplified these anxieties. The marketing of “agents” designed to automate software tasks further fuels the perception that the primary goal of AI adoption, from a corporate perspective, is cost reduction through labor cuts.

The Duolingo situation is a prime example of this tension. Although the company clarified that AI would replace contractors handling automatable tasks rather than staff, the public reaction focused intensely on the harm to workers. This reflects a broader empathy for people whose livelihoods are threatened by automation, particularly in a precarious economic climate. Merchant notes that “Workers are more intuitive than a lot of the pundit class gives them credit for... They know this has been a naked attempt to get rid of people.” That intuition drives much of the backlash and suggests that future resistance will increasingly take the form of organized labor action.

The concern is particularly acute for recent college graduates and those seeking entry-level positions, who fear that AI automation could shrink the pool of available jobs, making it harder to start their careers.

Ethical Pitfalls: Bias, Errors, and Manipulation

Generative AI models are trained on vast datasets scraped from the internet, which often contain existing societal biases. As a result, AI outputs can perpetuate and even amplify harmful stereotypes across languages and cultures. This raises serious ethical questions about fairness and equity in applications ranging from hiring and loan applications to criminal justice.

Beyond bias, AI tools are also prone to generating error-ridden outputs or confidently presenting false information as fact (hallucinations). This unreliability erodes trust and raises concerns about the integrity of information in an AI-saturated world. The infamous example of Google's AI Overviews providing bizarre or dangerous advice underscores the risks of deploying unproven AI systems widely.

Alex Hanna, coauthor of The AI Con and director of research at the Distributed AI Research Institute, points to the public's reaction to encountering AI-generated content online. She notes how people are increasingly “trolling” or criticizing AI-generated content in comment sections, indicating a growing public awareness and rejection of inauthentic or flawed AI outputs. Even subtle cues, like the overuse of em dashes, are now being scrutinized as potential giveaways of AI-generated text.

Environmental Costs and Social Inequality

The immense computational power required to train and run large generative AI models carries a significant environmental footprint. Data centers, the essential infrastructure for AI, consume vast amounts of energy and water, and when that energy comes from fossil fuels, they add to local and global pollution as well.

Crucially, the burden of this environmental damage often falls disproportionately on marginalized communities. As Hanna points out, “Data centers are being located in these really poor areas that tend to be more heavily Black and brown.” This has led to local resistance and organizing efforts in rural communities and areas like Memphis, Tennessee, where residents are protesting the construction of data centers powered by polluting generators, such as the one planned by Elon Musk's xAI.

This environmental injustice intersects with broader concerns about AI exacerbating social and economic inequality. Shannon Vallor, a technology philosopher and author of The AI Mirror, contrasts the current AI era with the early internet. While the internet initially promised democratized access to information and opportunities, Vallor argues that generative AI seems primarily designed to benefit those already in positions of power and wealth. “Now, we have an era of innovation where the greatest opportunities the technology creates are for those already enjoying a disproportionate share of strengths and resources,” she states.

Intrusiveness and Annoyance in Daily Life

Beyond the major ethical and societal concerns, the sheer intrusiveness of AI into everyday digital experiences is also contributing to public fatigue and annoyance. Users report being constantly prompted with AI-generated questions on platforms like LinkedIn. Spotify listeners have expressed frustration with AI-generated podcasts summarizing their listening habits. Even mundane encounters, like spotting AI-generated images on product packaging, are starting to grate on people's nerves.

This constant exposure, often without clear labeling or any perceived benefit, leaves many people feeling subjected to AI rather than empowered by it. Suspicion of AI use now runs so high that even ordinary stylistic choices in writing can draw accusations of chatbot assistance.

Impact on Mental Health and Relationships

The concerns extend to personal well-being. Parents worry about how AI use, particularly interaction with chatbots, affects their children’s mental health. There are also growing anxieties about chatbot addiction and about how intense relationships with AI companions could strain people's relationships with one another.

Organizing Resistance: From Online Outrage to Real-World Action

The frustration and concerns surrounding generative AI are not confined to online forums and comment sections. While social media provides a platform for expressing immediate outrage, the backlash is increasingly manifesting in real-world organizing and activism.

The protests against data centers in rural and marginalized communities are a clear example of this shift. Local residents are organizing to protect their environment and health from the pollution generated by the infrastructure required to power AI. This form of resistance is deeply rooted in tangible, immediate harms experienced by specific communities.

Similarly, the focus on job displacement is likely to fuel further organizing within labor movements. As more workers across different sectors feel threatened by automation, the potential for strikes, protests, and collective bargaining efforts specifically addressing AI's impact on the workforce will grow. The historical parallel to the Luddites, who resisted the introduction of machinery that threatened their livelihoods, is increasingly relevant in this context.

The Duolingo incident shows how hard it is for companies to navigate this new landscape of public skepticism. Despite the company's attempts at damage control through satirical videos and explanations from its CEO, criticism continued in the comments, a sign that the core concern (the replacement of human work with automation) remains a potent trigger for negative sentiment.

Conclusion: A Critical Juncture for AI Adoption

The initial hype surrounding generative AI is giving way to a more critical and often adversarial public stance. The backlash is being driven by a complex interplay of factors: fears of job loss, concerns about environmental damage and social inequality, ethical issues like bias and misinformation, challenges to creative industries through copyright infringement, and the simple annoyance of intrusive AI in daily life. Unlike the early internet, which felt empowering to many, the current phase of AI development is perceived by a growing number of people as primarily benefiting the powerful while creating new problems and anxieties for the rest.

As generative AI tools continue to proliferate, the pushback is likely to grow stronger and more organized. Companies and developers will need to address these concerns head-on, moving beyond simply deploying technology to considering its broader societal impact. The narrative around AI is shifting, and the coming years may see increased demands for regulation, ethical guidelines, and a more equitable approach to technological development, driven by a public that is increasingly ready to fight back against the perceived negative consequences of the AI revolution.